Paul Debevec inside his geodesic light box at the USC Institute for Creative Technologies in Playa Vista.

The motion-capture technology that made “Avatar” one of the most expensive films in history will, in the not-too-distant future, become a tool of independent film-makers on shoestring budgets.

As computer graphics algorithms improve and costs fall, shooting a film with lights, cameras and human actors may be considered a quaint and expensive luxury.

“There will be a cross-over point,” said Paul Debevec, a research director at the Institute for Creative Technologies in Playa Vista. “I think eventually it will only be the big-budget movies that will be able to go on location with real actors.”

The ICT is a satellite research facility affiliated with the University of Southern California and funded by the Department of Defense. The goal is to improve military training exercises with immersive media experiences like virtual reality and photorealistic digital humans.

But the institute also collaborates with game developers and special effects studios from Hollywood and has helped create some of the most visually arresting films of the past two decades, including The Matrix, The Curious Case of Benjamin Button, Gravity and Avatar.

Debevec’s PhD thesis was the inspiration for the bullet-dodging scene in The Matrix.

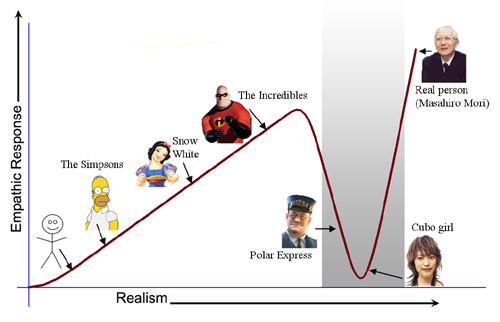

Debevec’s work with light and three-dimensional scanning is pushing digital animation across the “uncanny valley,” the uncomfortable feeling you get when you stand next to a hyper-realistic statue of David Beckham at a wax museum or watch Tom Hanks’s character in The Polar Express.

His team has already figured out how to digitally imitate the way light falls on skin. The next challenge is to imitate the way skin moves and eyes stare. Cracking those codes will lead to more realistic video game characters and storytelling possibilities for film-makers.

See “Digital Emily” from 2008 and “Digital Ira,” ICT’s 2012 collaboration with Activision.

Debevec foresees a future where most films are made like Gravity, where George Clooney and Sandra Bullock were shot inside a giant light box and their faces were inserted into an entirely digital environment.

As computer speeds and algorithms improve, film studios may one day be able to forgo the cost of hiring A-list actors like Clooney and Bullock. Instead, they may license the rights to their scanned faces and paste that data onto a digital puppet animated by the movements of another actor moving in front of a motion-capture camera.

The technology could even bring actors back from the dead. Peter Jackson’s visual effects studio Weta Digital, which has partnered with Debevec on several films, was able to reanimate Paul Walker after the actor died in the midst of filming for Furious 7, which premieres this weekend.

“I like the idea that film-makers have as much creative control as they want on the screen,” Debevec said. “They could have every character in the film look exactly the way they want them to and not worry about if this actor becomes available or that actor becomes available.”

Studios regularly scan their actors to help animators create more realistic action sequences that are too dangerous even for stunt doubles. As digital avatar technology improves and prices fall, scanning actors may become a common insurance practice for studios.

Of course, nobody is going to puppeteer a digital avatar of Paul Walker better than Paul Walker. But Debevec believes in actors’ ability to mimic other people’s mannerisms. The actor who played Richard Nixon in the 2008 film Frost/Nixon did an excellent job, but he looked nothing like the real president, Debevec said.

Last year, the ICT scanned President Barack Obama’s face by photographing him from multiple angles under several lighting conditions. A computer algorithm constructed a three-dimensional, photographic rendering that was used to print a 3-D bust for the National Portrait Gallery.

Debevec believes that same data set could be used 100 years from now to create a virtual Barack Obama character in a film.

It could also be in actors’ financial interest to have their faces scanned. Twenty years from now, an actor may audition for a role that requires a flashback to when the character was much younger. Possessing their digital scan could help actors negotiate higher fees, since the studio would not have to hire a younger actor for the same character.

They could also choose not to age, at least on screen.

Once this technology becomes as inexpensive as cutting a film on Final Cut, studios could conceivably create characters that are composites of several facial and body scans. An actor in a motion-capture suit could then animate the avatar in the same way voice actors bring cartoons to life with their words and personality.

With this type of virtual film-making, actors could be judged on skill alone, since beauty (in the real world) would no longer have any value.

The studios would also save money on positions like makeup artists, lighting technicians, physical set builders and casting directors, since modifying actors and their surroundings would be as simple as turning a dial on a screen.

“The first time you do anything, it costs a lot of money,” Debevec said. “Hollywood doesn’t intentionally look for the most expensive way to do something.”